The Algorithm

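The Generative Adversarial Network I chose was developed by Tero Karras et al. It uses progressive training: the generator and discriminator start at a very low image resolution, and layers are added step by step until the network reaches full resolution. Because much of the training happens on small, cheap-to-process images, this greatly reduces training time and, with it, the overall cost of running the GAN. Find out more about the algorithm in the paper linked below.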
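To make "progressive training" a bit more concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not the authors' code. The resolution doubles at each stage, and a newly added layer is faded in gradually: its output is blended with a nearest-neighbour upsampled copy of the previous stage's output, using a weight `alpha` that rises from 0 to 1. The function names, the 4 to 1024 pixel range used as defaults, and the use of single-channel 2D lists as "images" are all assumptions made for this example.

```python
# Hypothetical sketch of progressive growing (simplified; not the paper's code).
# Images are single-channel 2D lists of floats to keep the example dependency-free.

def resolution_schedule(start=4, final=1024):
    """Return the training resolutions, doubling each stage (assumed defaults)."""
    resolutions = []
    r = start
    while r <= final:
        resolutions.append(r)
        r *= 2
    return resolutions

def upsample_nearest(img):
    """2x nearest-neighbour upsampling: each pixel becomes a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]  # duplicate each column
        out.append(wide)
        out.append(list(wide))                     # duplicate each row
    return out

def alpha_at(step, fade_steps):
    """Fade-in weight: rises linearly from 0 to 1, then stays at 1."""
    return min(1.0, step / fade_steps)

def fade_in(low_res, high_res, alpha):
    """Blend (1 - alpha) * upsampled(low_res) + alpha * high_res, pixel by pixel."""
    up = upsample_nearest(low_res)
    return [
        [(1 - alpha) * u + alpha * h for u, h in zip(up_row, hi_row)]
        for up_row, hi_row in zip(up, high_res)
    ]
```

For example, `resolution_schedule()` gives `[4, 8, 16, 32, 64, 128, 256, 512, 1024]`, and `fade_in([[1.0]], [[0.0, 0.0], [0.0, 0.0]], 0.25)` gives `[[0.75, 0.75], [0.75, 0.75]]`: early in the fade-in, the old low-resolution output still dominates, so the new layer cannot suddenly disrupt what the network has already learned.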

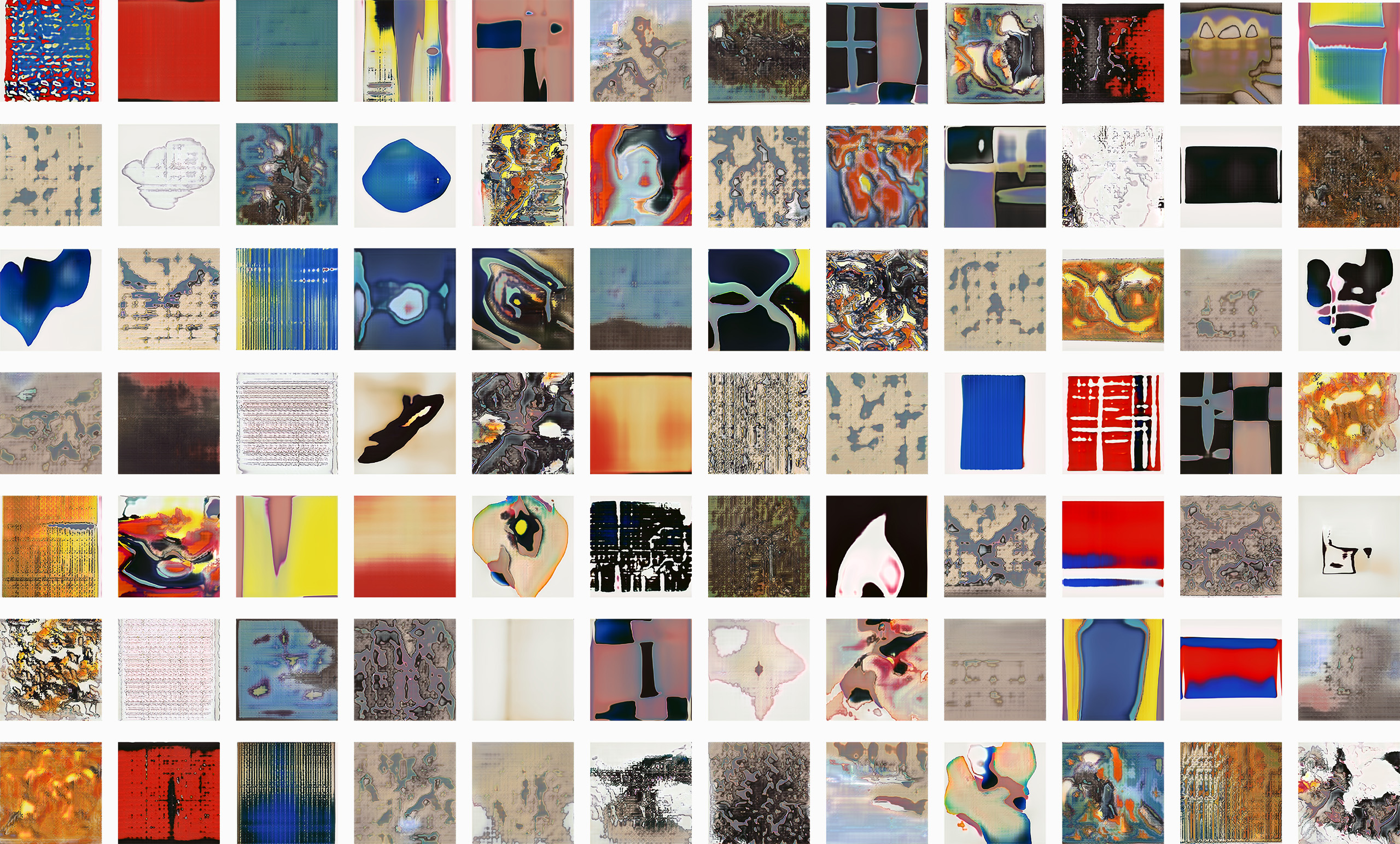

A random series of pictures dreamed up by my computer.